Java Get Line From File and Being Able to Get It Again

Using Java to Read Really, Really Large Files

This is the kind of stuff Java was made for.

Anyone who knows me fairly well in my programming life will know that I'm not particularly partial to Java.

I'm a JavaScript developer first and foremost. It was what I learned first, it confounded and then delighted me after I started to get the hang of it, and it made a heck of a lot more sense to me than Java. Java has its compilation and its need to declare every single variable type (yeah, I know the latest versions of Java have done away with this requirement for some of the simpler inferences). It also has massive libraries of maps, lists, collections, etc.: HashMaps, Maps, HashTables, TreeMaps, ArrayLists, LinkedLists, Arrays; it goes on and on.

That being said, I do make an effort to get better at Java and to address the other shortcomings I have from not having a traditional computer science degree. If you'd like to know more about my singular path to becoming a software engineer, you can read my first blog post here.

A couple of months back, I came across a coding challenge and solved it in JavaScript (then performance tested my various solutions). When I told a colleague of mine about it, he looked back at me and said: "How would you solve it using Java?"

I stared at him as the wheels began to turn in my head, and I accepted the challenge to find an optimal solution in Java as well.

So before I get down to how I solved the challenge using Java, let me recap the requirements.

The Challenge I Faced

As I said in my original article on using Node.js to read really, really large files, this was a coding challenge issued to another developer for an insurance technology company.

The challenge was straightforward enough. Download this large zip file of text from the Federal Election Commission, read the data out of the .txt file supplied, and supply the following info:

- Write a program that will print out the total number of lines in the file.

- Notice that the 8th column contains a person's name. Write a program that loads in this data and creates an array with all name strings. Print out the 432nd and 43243rd names.

- Notice that the 5th column contains a form of date. Count how many donations occurred in each month and print out the results.

- Notice that the 8th column contains a person's name. Create an array with each first name. Identify the most common first name in the data and how many times it occurs.

Link to the data: https://www.fec.gov/files/bulk-downloads/2018/indiv18.zip*

Note: I added the asterisk after the file link because others who have taken on this challenge have seen the file size increase since I downloaded it at the beginning of October 2018. At last count, someone mentioned it was up to 3.5GB, so this data seems to be still live and growing all the time. I believe the solutions I present below will still work, though your counts and numbers will vary from mine.

I liked the challenge and wanted some practice manipulating files, so I decided to see if I could figure it out. Now, without further ado, let's talk about some different solutions I came up with to read really, really large files in Java.

The Three Java Solutions I Came Up With

Java has long been a standard programming language with file processing capabilities. As such, there have been a large number of ever-improving ways to read, write and manipulate files with it.

Some methods are baked straight into the core Java framework, and some are still independent libraries that need to be imported and bundled together to run. Regardless, I came up with three different methods to read files in Java, and then I performance tested them to see which methods were more efficient.

Below are Polacode-formatted screenshots of all my code along with snippets of various pieces of code. If you'd like to see all of the original code, you can access my GitHub repo here.

You'll notice that I used the same code logic to extract the data from each file. The major differences are in the initial file connection and text parsing. I did this so that I could get a more accurate idea of how the different methods stacked up against each other in the performance part of my evaluation. Apples-to-apples comparisons and all that.

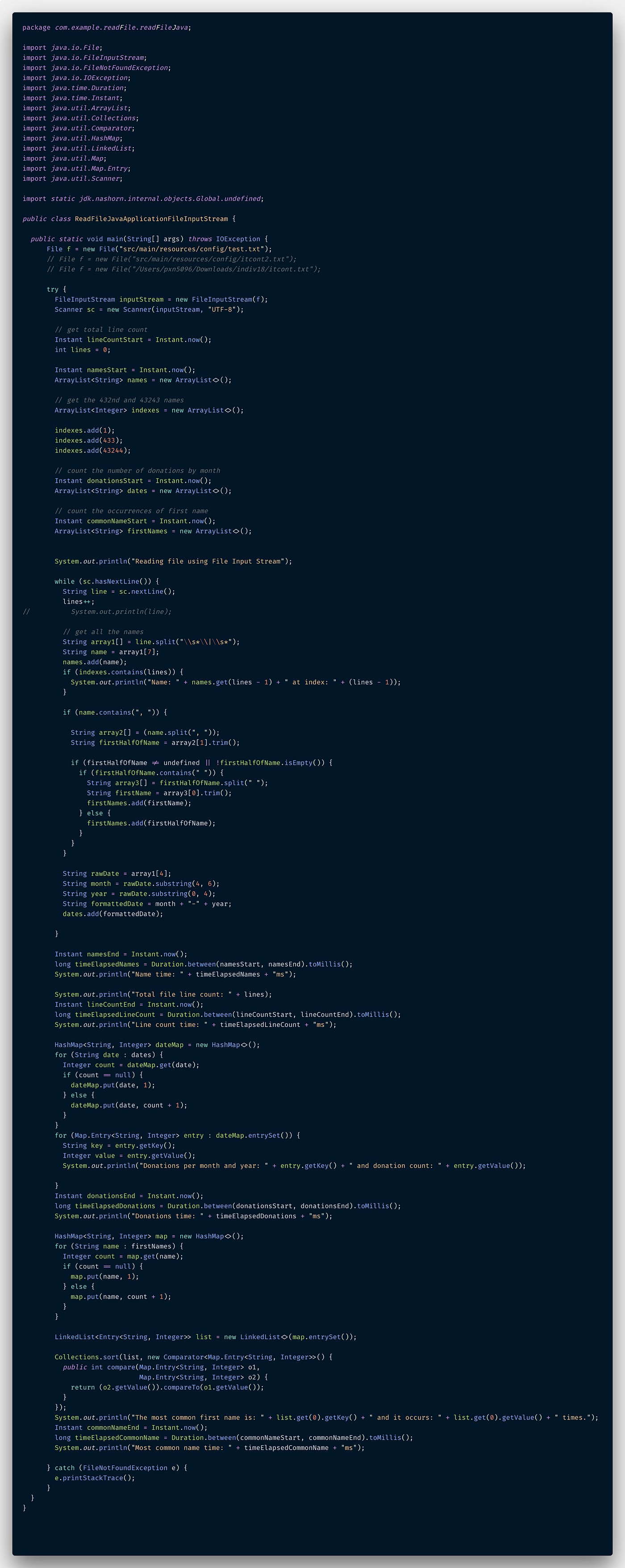

Java FileInputStream() & Scanner() Implementation

The first solution I came up with uses Java's built-in FileInputStream() class combined with Scanner().

In essence, FileInputStream just opens the connection to the file to be read, be it images, characters, etc. It doesn't particularly care what the file actually is, because Java reads the input stream as raw bytes of data. Another option (at least for my example) is to use FileReader(), which is specifically for reading streams of characters. I went with FileInputStream() for this particular scenario and used FileReader() in another solution I tested later.
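Here's a minimal sketch (not part of my original repo) that makes the bytes-versus-characters difference concrete. It uses an in-memory ByteArrayInputStream in place of FileInputStream so it runs without a data file. The class name ByteVsCharDemo and the sample text are made up for illustration, and the sketch assumes UTF-8 input. Reading the raw stream counts bytes. Wrapping the same bytes in an InputStreamReader decodes them into characters, which is the step a Reader like FileReader takes care of for you.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.Reader;
import java.nio.charset.StandardCharsets;

class ByteVsCharDemo {

    // Counts raw bytes, the way a FileInputStream sees the data.
    static int countBytes(byte[] data) throws IOException {
        int count = 0;
        try (InputStream in = new ByteArrayInputStream(data)) {
            while (in.read() != -1) {
                count++;
            }
        }
        return count;
    }

    // Counts decoded characters, the way a Reader sees the same data.
    static int countChars(byte[] data) throws IOException {
        int count = 0;
        try (Reader reader = new InputStreamReader(
                new ByteArrayInputStream(data), StandardCharsets.UTF_8)) {
            while (reader.read() != -1) {
                count++;
            }
        }
        return count;
    }

    public static void main(String[] args) throws IOException {
        // "José|Zoë": the two accented letters take 2 bytes each in UTF-8.
        byte[] data = "Jos\u00e9|Zo\u00eb".getBytes(StandardCharsets.UTF_8);
        System.out.println("bytes: " + countBytes(data)); // bytes: 10
        System.out.println("chars: " + countChars(data)); // chars: 8
    }
}
```

For plain ASCII text the two counts match, which is why FileInputStream plus Scanner still works fine for the FEC file. The difference only shows up with multi-byte characters.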

Once the connection to the file is established, Scanner comes into play to parse the text bytes into strings of readable data. Scanner() breaks its input into tokens using a delimiter pattern. By default the delimiter matches whitespace, but it can be overridden with a regex or other values. Then, using the Scanner.hasNextLine() boolean and the Scanner.nextLine() method, I can read the contents of the text file line by line and pull out the pieces of data that I need.

Scanner.nextLine() advances the scanner past the current line and returns the input that was skipped. That's how I gather the required info from each line until there are no more lines to read. At that point Scanner.hasNextLine() returns false and the while loop ends.
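Here's a small, self-contained sketch of the difference between token-based and line-based reading with Scanner. It reads from a String instead of a file so it runs anywhere. The class name ScannerDemo and the sample text are hypothetical.
- next() stops at whitespace by default.
- useDelimiter() swaps in a different pattern.
- hasNextLine()/nextLine() return whole lines with the line terminator removed.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Scanner;

class ScannerDemo {

    // Default delimiter: any run of whitespace.
    static List<String> tokens(String text) {
        List<String> out = new ArrayList<>();
        try (Scanner sc = new Scanner(text)) {
            while (sc.hasNext()) {
                out.add(sc.next());
            }
        }
        return out;
    }

    // Custom delimiter: a pipe, optionally surrounded by whitespace.
    static List<String> pipeTokens(String text) {
        List<String> out = new ArrayList<>();
        try (Scanner sc = new Scanner(text).useDelimiter("\\s*\\|\\s*")) {
            while (sc.hasNext()) {
                out.add(sc.next());
            }
        }
        return out;
    }

    // Line-based reading, the same pattern as the file-processing loop.
    static List<String> lines(String text) {
        List<String> out = new ArrayList<>();
        try (Scanner sc = new Scanner(text)) {
            while (sc.hasNextLine()) {
                out.add(sc.nextLine());
            }
        }
        return out;
    }

    public static void main(String[] args) {
        String twoRows = "DOE, JANE|201801\nROE, RICHARD|201712";
        System.out.println(tokens(twoRows));
        System.out.println(pipeTokens("DOE, JANE | 201801"));
        System.out.println(lines(twoRows));
    }
}
```

Whitespace tokens split "DOE, JANE" into two pieces, which is why the file loops read whole lines and split them on the pipe afterwards.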

Here's a sample of code using FileInputStream() and Scanner().

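```java
import java.io.File;
import java.io.FileInputStream;
import java.io.IOException;
import java.util.Scanner;

File f = new File("src/main/resources/config/test.txt");
// try-with-resources closes the Scanner and the stream when done
try (FileInputStream inputStream = new FileInputStream(f);
     Scanner sc = new Scanner(inputStream, "UTF-8")) {
    // do some things ...
    while (sc.hasNextLine()) {
        String line = sc.nextLine();
        // do some more things ...
    }
    // do some final things
} catch (IOException e) {
    e.printStackTrace();
}
```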

And here is my full code to solve all the tasks set out above.

Once the file's data is being read one line at a time, it's just a matter of getting the necessary data and manipulating it to fit my needs.

Task 1: Get the File's Total Line Count

Getting the line count for the entire file was easy. All it involved was a new int lines = 0 declared outside the while loop, which I incremented each time the loop ran. Request #1: done.

Task 2: Create a List of All the Names and Find the 432nd and 43243rd Names

The second request was to collect all the names and print the 432nd and 43243rd names from the array. It required me to create an ArrayList<String> names = new ArrayList<>(); and an ArrayList<Integer> indexes = new ArrayList<>();. I promptly added the positions for 432 and 43243 to the indexes list with indexes.add(433) and indexes.add(43244), respectively.

I had to add one to each index to get the right name position in the array. That's because I incremented my line count (starting at 0) as soon as each new line was read. By the time I pulled the name out of the current line and added it to the list, its true index (starting from index 0) was the line count minus one. (Trust me, I triple checked this to make sure I was doing my math correctly.)

I used an ArrayList for the names because it maintains the elements' insertion order. When the list's elements are displayed, they always appear in the same order in which they were inserted. Since I'm iterating through the file line by line, the elements will always be inserted into the list in the same order.
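The off-by-one reasoning above is easy to get wrong, so here's a tiny sketch of the same bookkeeping on a made-up list of names. The class name IndexDemo, the method name and the data are hypothetical. The counter is incremented before the current name is added. So the name that was just added always sits at index lines - 1, and checking for a counter value of 433 picks out index 432.

```java
import java.util.ArrayList;
import java.util.List;

class IndexDemo {

    // Mirrors the loop: increment the 1-based counter, add the name,
    // and if the counter matches the target, return the just-added name.
    static String nameWhenCountIs(List<String> input, int target) {
        int lines = 0;
        List<String> names = new ArrayList<>();
        for (String name : input) {
            lines++;
            names.add(name);
            if (lines == target) {
                // The name just added is at the 0-based index lines - 1.
                return names.get(lines - 1);
            }
        }
        return null; // the target count was never reached
    }

    public static void main(String[] args) {
        List<String> input = List.of("ANN", "BOB", "CAL", "DEE");
        // A counter value of 3 corresponds to index 2.
        System.out.println(nameWhenCountIs(input, 3)); // CAL
    }
}
```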

Here's the full logic I used to collect all the names and then print out a name whenever the line count matched one of the values in my indexes ArrayList.

```java
int lines = 0;
ArrayList<String> names = new ArrayList<>();

// get the 432nd and 43243rd names
ArrayList<Integer> indexes = new ArrayList<>();
indexes.add(433);
indexes.add(43244);

System.out.println("Reading file using File Input Stream");

while (sc.hasNextLine()) {
    String line = sc.nextLine();
    lines++;
    // get all the names
    String array1[] = line.split("\\s*\\|\\s*");
    String name = array1[7];
    names.add(name);
    if (indexes.contains(lines)) {
        System.out.println("Name: " + names.get(lines - 1) + " at index: " + (lines - 1));
    }
    // ...
}
```

Request #2: done.
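One detail in the loop above is worth spelling out. String.split() takes a regular expression, which is why the pipe is escaped as \\| in the Java string literal. An unescaped | means "or" in a regex and would split the line between every character. By default split() also drops trailing empty strings, so if a row ever ended with empty fields, those columns would silently disappear. Passing a negative limit keeps them. Here's a small sketch with a hypothetical class name and a made-up row, not actual FEC data:

```java
class SplitDemo {

    // Same pattern as the loop above: a literal pipe with optional whitespace around it.
    private static final String PIPE = "\\s*\\|\\s*";

    static String[] columns(String line, boolean keepTrailingEmpty) {
        // A negative limit keeps trailing empty strings; the default (limit 0) drops them.
        return keepTrailingEmpty ? line.split(PIPE, -1) : line.split(PIPE);
    }

    public static void main(String[] args) {
        String row = "C001|N|DOE, JANE||";
        System.out.println(columns(row, false).length); // 3
        System.out.println(columns(row, true).length);  // 5
    }
}
```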

Task 3: Count How Many Donations Occurred in Each Month

As I approached the donation-counting request, I wanted to do more than count donations by month. I wanted to count them by both month and year, as I had donations from both 2017 and 2018.

The very first thing I did was set up an initial ArrayList to hold all my dates: ArrayList<String> dates = new ArrayList<>();

Then I took the 5th element in each line, the raw date, and used the substring() method to pull out just the month and year for each donation.

I reformatted each date into easier-to-read dates and added them to the new dates ArrayList.

```java
String rawDate = array1[4];
String month = rawDate.substring(4, 6);
String year = rawDate.substring(0, 4);
String formattedDate = month + "-" + year;
dates.add(formattedDate);
```

After I'd collected all the dates, I created a HashMap to hold them: HashMap<String, Integer> dateMap = new HashMap<>();. Then I looped through the dates list. Each date was added as a key to the HashMap if it didn't already exist, or its count was incremented if it did.

Once the HashMap was made, I ran it through another for loop to get each entry's key and value and print them to the console. Voilà.

The date results were not sorted in any particular order. They could be, by transforming the HashMap back into an ArrayList or LinkedList if need be. I chose not to, though, because it was not a requirement.

```java
HashMap<String, Integer> dateMap = new HashMap<>();
for (String date : dates) {
    Integer count = dateMap.get(date);
    if (count == null) {
        dateMap.put(date, 1);
    } else {
        dateMap.put(date, count + 1);
    }
}

for (Map.Entry<String, Integer> entry : dateMap.entrySet()) {
    String key = entry.getKey();
    Integer value = entry.getValue();
    System.out.println("Donations per month and year: " + key
            + " and donation count: " + value);
}
```

Request #3: done.
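If you did want the month-year counts in calendar order, one option (not what I did above) is a TreeMap with a comparator that parses each "MM-yyyy" key into a java.time.YearMonth. Sorting the strings alphabetically would order by month first, so 01-2018 would land before 12-2017. The sketch below also uses Map.merge() as a shorter way to do the get-then-put counting. The class name, the helper names and the sample raw values are hypothetical. The raw values only need to start with a four-digit year followed by a two-digit month, matching the substring() calls above.

```java
import java.time.YearMonth;
import java.time.format.DateTimeFormatter;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class MonthCounts {

    // "uuuu" is the proleptic year, so parsing needs no era field.
    private static final DateTimeFormatter MONTH_YEAR = DateTimeFormatter.ofPattern("MM-uuuu");

    // Same reformatting as above: characters 0-3 are the year, 4-5 the month.
    static String formatDate(String rawDate) {
        String month = rawDate.substring(4, 6);
        String year = rawDate.substring(0, 4);
        return month + "-" + year;
    }

    // Counts donations per "MM-yyyy" key, with keys kept in chronological order.
    static Map<String, Integer> countByMonth(List<String> rawDates) {
        Comparator<String> chronological =
                Comparator.comparing(key -> YearMonth.parse(key, MONTH_YEAR));
        Map<String, Integer> counts = new TreeMap<>(chronological);
        for (String raw : rawDates) {
            // merge() stores 1 for a new key, otherwise adds 1 to the existing count.
            counts.merge(formatDate(raw), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> raw = List.of("201801170001", "201712050002", "201801300003");
        System.out.println(countByMonth(raw)); // {12-2017=1, 01-2018=2}
    }
}
```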

Task 4: Identify the Most Common First Name & How Often It Occurs

The fourth request, to get only the first names and find the count of the most commonly occurring one, was the trickiest.

First, it required me to check if the name contained a comma (there were some business names that had no commas). Then I had to split() the name on the comma and trim() any extraneous white space from it.

Once that was cleaned up, I had to check if the first half of the name had any white spaces. A space meant the person had a first name and a middle name, or possibly a title like "Ms.". If it did, I split() it again and trim()med the first element of the newly made array, which I presumed would almost always be the first name.

If the first half of the name didn't have a space, it was added to the firstNames ArrayList as is. That's how I collected all the first names from the file. See the code snippet below.

```java
// count the occurrences of first name
ArrayList<String> firstNames = new ArrayList<>();

System.out.println("Reading file using File Input Stream");

while (sc.hasNextLine()) {
    String line = sc.nextLine();
    // get all the names
    String array1[] = line.split("\\s*\\|\\s*");
    String name = array1[7];
    names.add(name);
    // some business names have no comma, so only "LAST, FIRST ..." names are used
    if (name.contains(", ")) {
        String array2[] = name.split(", ");
        // skip names with nothing after the comma
        if (array2.length > 1) {
            String firstHalfOfName = array2[1].trim();
            if (!firstHalfOfName.isEmpty()) {
                if (firstHalfOfName.contains(" ")) {
                    // keep only the first word, dropping a middle name or initial
                    String array3[] = firstHalfOfName.split(" ");
                    String firstName = array3[0].trim();
                    firstNames.add(firstName);
                } else {
                    firstNames.add(firstHalfOfName);
                }
            }
        }
    }
}
```

Once I've collected all the first names I can and the while loop reading the file has ended, it's time to count and sort the names and find the most common one.

For this, I created another new HashMap: HashMap<String, Integer> map = new HashMap<>();. Then I looped through all the names. If a name didn't exist in the map already, it was added as a key with its value set to 1. If the name already existed in the HashMap, its value was incremented by 1.

```java
HashMap<String, Integer> map = new HashMap<>();
for (String name : firstNames) {
    Integer count = map.get(name);
    if (count == null) {
        map.put(name, 1);
    } else {
        map.put(name, count + 1);
    }
}
```

But wait, there's more! The HashMap is unordered by nature, so it needs to be sorted from largest to smallest value to get the most commonly occurring first name. To do that, I copy the HashMap's entries into a LinkedList, which can be ordered and iterated through.

```java
LinkedList<Map.Entry<String, Integer>> list = new LinkedList<>(map.entrySet());
```

Finally, the list is sorted using the Collections.sort() method, with a Comparator that orders the name entries by their counts in descending order (highest value first). Check this out.

```java
Collections.sort(list, new Comparator<Map.Entry<String, Integer>>() {
    @Override
    public int compare(Map.Entry<String, Integer> o1,
                       Map.Entry<String, Integer> o2) {
        // comparing o2 to o1 gives descending order
        return o2.getValue().compareTo(o1.getValue());
    }
});
```

Once all of that has been done, the first key-value pair of the LinkedList can finally be pulled out and displayed to the user. Here's the whole shebang put together once all the first names have been read out of the file.

```java
HashMap<String, Integer> map = new HashMap<>();
for (String name : firstNames) {
    Integer count = map.get(name);
    if (count == null) {
        map.put(name, 1);
    } else {
        map.put(name, count + 1);
    }
}

LinkedList<Map.Entry<String, Integer>> list = new LinkedList<>(map.entrySet());

// sort the entries by count, highest first
Collections.sort(list, new Comparator<Map.Entry<String, Integer>>() {
    @Override
    public int compare(Map.Entry<String, Integer> o1,
                       Map.Entry<String, Integer> o2) {
        return o2.getValue().compareTo(o1.getValue());
    }
});

System.out.println("The most common first name is: " + list.get(0).getKey()
        + " and it occurs: " + list.get(0).getValue() + " times.");
```

Request #4 (and arguably the most complicated of all the tasks): done.
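For comparison, on Java 8 or later the counting and "find the top entry" steps can be written more compactly. Map.merge() handles the counting, and a stream max() finds the top entry without sorting the whole list. This is an alternative sketch, not the code I benchmarked, and the class and method names are hypothetical. If two names tie for the highest count, which one comes back depends on the HashMap's iteration order, which is unspecified.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

class FirstNameCounter {

    // Counts each first name; merge() stores 1 for a new key or adds 1 to the old value.
    static Map<String, Integer> countNames(List<String> firstNames) {
        Map<String, Integer> counts = new HashMap<>();
        for (String name : firstNames) {
            counts.merge(name, 1, Integer::sum);
        }
        return counts;
    }

    // Returns the entry with the highest count, or an empty Optional if there were no names.
    static Optional<Map.Entry<String, Integer>> mostCommon(List<String> firstNames) {
        return countNames(firstNames).entrySet().stream()
                .max(Map.Entry.comparingByValue());
    }

    public static void main(String[] args) {
        List<String> names = List.of("JOHN", "MARY", "JOHN", "ANN", "JOHN", "MARY");
        // prints: The most common first name is: JOHN and it occurs: 3 times.
        mostCommon(names).ifPresent(e -> System.out.println(
                "The most common first name is: " + e.getKey()
                        + " and it occurs: " + e.getValue() + " times."));
    }
}
```

If you'd rather keep the fully sorted list, the anonymous Comparator can also be replaced with Map.Entry.comparingByValue(Comparator.reverseOrder()).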

Great, now that I've given you the soliloquy of my brain's logic in Java, I can give you much quicker overviews of the other two methods I tried for reading the text data from the files. The logic portion of the code is exactly the same.

Java BufferedReader() & FileReader() Implementation

My second solution involved two more of Java's core classes: BufferedReader() and FileReader().

BufferedReader reads text from a character-input stream, buffering characters so as to provide for the efficient reading of characters, arrays, and lines. Here it is wrapped around a FileReader, which is the class that actually reads the specified text file. The BufferedReader makes the FileReader more efficient in its operation, that's all.

BufferedReader's readLine() method returns each line of text as it is read from the stream (or null once the end of the stream is reached), allowing us to pull out the data needed.
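Here's a minimal, runnable sketch of the readLine() loop. It wraps a StringReader instead of a FileReader so it runs without a file; the class and method names are hypothetical. BufferedReader accepts any Reader, so the loop is identical either way. One thing worth knowing for real files: FileReader used the platform default charset before Java 18 unless you passed one explicitly (a constructor taking a Charset was added in Java 11). java.nio.file.Files.newBufferedReader(path, StandardCharsets.UTF_8) is another way to get a buffered reader with an explicit charset.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;

class BufferedReaderDemo {

    // Counts lines using the same readLine() loop as the file-based version.
    static int countLines(Reader source) throws IOException {
        int lines = 0;
        try (BufferedReader b = new BufferedReader(source)) {
            String readLine;
            // readLine() strips "\n", "\r\n" or "\r" and returns null at end of stream.
            while ((readLine = b.readLine()) != null) {
                lines++;
            }
        }
        return lines;
    }

    public static void main(String[] args) throws IOException {
        String text = "first line\nsecond line\r\nthird line";
        System.out.println(countLines(new StringReader(text))); // 3
    }
}
```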

The setup is similar to FileInputStream and Scanner; you can see how to implement BufferedReader and FileReader below.

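```java
import java.io.BufferedReader;
import java.io.File;
import java.io.FileReader;
import java.io.IOException;

File f = new File("src/main/resources/config/test.txt");
try (BufferedReader b = new BufferedReader(new FileReader(f))) {
    String readLine = "";
    // do some things ...
    while ((readLine = b.readLine()) != null) {
        // do some more things ...
    }
    // do some final things
} catch (IOException e) {
    e.printStackTrace();
}
```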

And here is my total code using BufferedReader() and FileReader().

Beyond the BufferedReader and FileReader setup, all the logic inside is the same. So I'll move on now to my final Java file reader implementation: FileUtils.LineIterator.

Apache Commons IO FileUtils.LineIterator() Implementation

The last solution I came up with involves Apache's Commons IO library, whose FileUtils.lineIterator() method returns a LineIterator. It's easy enough to include the dependency. I used Gradle for my Java project, so all I had to do was include the commons-io library in my build.gradle file.

The LineIterator does exactly what its name suggests: it holds a reference to an open Reader (like the FileReader in my last solution) and iterates over each line in the file. And it's really easy to set up in the first place.

LineIterator has a built-in method called nextLine(), which returns the next line in the wrapped reader (not unlike Scanner's nextLine() method or BufferedReader's readLine() method).
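To illustrate the idea behind a line iterator, here's a minimal sketch built on top of BufferedReader that reads one line ahead. hasNext() tries to fetch the next line and caches it, and nextLine() hands the cached line back. This is not Commons IO's actual source. It's a hypothetical class, SimpleLineIterator, that assumes you only need hasNext(), nextLine() and close().

```java
import java.io.BufferedReader;
import java.io.Closeable;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Iterator;
import java.util.NoSuchElementException;

class SimpleLineIterator implements Iterator<String>, Closeable {

    private final BufferedReader reader;
    private String cachedLine; // line read ahead by hasNext(), or null
    private boolean finished;

    SimpleLineIterator(Reader source) {
        this.reader = new BufferedReader(source);
    }

    @Override
    public boolean hasNext() {
        if (cachedLine != null) {
            return true; // already read ahead; calling hasNext() twice must not skip a line
        }
        if (finished) {
            return false;
        }
        try {
            cachedLine = reader.readLine();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        if (cachedLine == null) {
            finished = true;
            return false;
        }
        return true;
    }

    // Returns the cached line and clears the cache so the next call reads ahead again.
    public String nextLine() {
        if (!hasNext()) {
            throw new NoSuchElementException("No more lines");
        }
        String line = cachedLine;
        cachedLine = null;
        return line;
    }

    @Override
    public String next() {
        return nextLine();
    }

    @Override
    public void close() throws IOException {
        finished = true;
        cachedLine = null;
        reader.close();
    }

    public static void main(String[] args) throws IOException {
        try (SimpleLineIterator it = new SimpleLineIterator(new StringReader("a\nb"))) {
            while (it.hasNext()) {
                System.out.println(it.nextLine());
            }
        }
    }
}
```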

Here's the code to set up FileUtils.lineIterator() once the dependency library has been included.

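```java
import java.io.File;
import java.io.IOException;
import org.apache.commons.io.FileUtils;
import org.apache.commons.io.LineIterator;

File f = new File("src/main/resources/config/test.txt");
// LineIterator is Closeable in recent Commons IO versions, so try-with-resources closes the reader
try (LineIterator it = FileUtils.lineIterator(f, "UTF-8")) {
    // do some things ...
    while (it.hasNext()) {
        String line = it.nextLine();
        // do some other things ...
    }
    // do some final things
} catch (IOException e) {
    e.printStackTrace();
}
```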

And here is my full code using FileUtils.LineIterator().

Note: There is one thing you need to be aware of if you're running a plain Java application without the aid of Spring Boot. If you want to use this extra Apache dependency library, you need to manually package it together with your application's JAR file into what's called a fat JAR.

A fat JAR (also known as an uber JAR) is a self-sufficient archive that contains both the classes and the dependencies needed to run an application.

Spring Boot 'automagically' bundles all of our dependencies together for us. But it also brings a lot of extra overhead and functionality that is totally unnecessary for this project, which is why I chose not to use it. It makes the project unnecessarily heavy.

There are plugins available for this, but I just wanted a quick and easy way to bundle my one dependency up with my JAR. So I modified the jar task from the Java Gradle plugin. By default, this task produces JARs without any dependencies.

I can override this behavior by adding a few lines of code. I just need two things to make it work:

- a Main-Class attribute in the manifest file (check, I had 3 main class files in my demo repo for testing purposes)

- and any dependency JARs

Thanks to Baeldung for the help on making this fat JAR.

Once the main class file has been defined and the dependencies are included, you can run the ./gradlew assemble command from the terminal and then run the line below. (In my demo repo I made three main class files, which confused my IDE to no end.)

```shell
java -cp ./build/libs/readFileJava-0.0.1-SNAPSHOT.jar com.example.readFile.readFileJava.ReadFileJavaApplicationLineIterator
```

And your program should run with the LineIterator library included.

If you're using IntelliJ as your IDE, you can also just use its run configurations locally, with each of the main files specified as the correct main class, and it should run the three programs as well. See my README.md for more info on this.

Great, now I have three different ways to read and process large text files in Java. My next mission: figure out which way is more performant.

How I Evaluated Their Performance & The Results

For performance testing my different Java applications and the functions within them, I came across two handy, ready-made methods from Java 8's java.time API: Instant.now() and Duration.between().

I wanted to see if there were any measurable differences between the different ways of reading the same file. So apart from the different file read options (FileInputStream, BufferedReader and LineIterator), I kept the code as similar as possible, including the timestamps marking the start and stop of each function. And I think it worked out pretty well.

Instant.now()

Instant.now() does exactly what its name suggests: it returns an Instant, a single, instantaneous point on the timeline. An Instant is stored as a long representing epoch-seconds and an int representing nanosecond-of-second. This isn't incredibly useful on its own, but combined with Duration.between(), it becomes very useful.

Duration.between()

Duration.between() takes a start instant and an end instant and finds the duration between those two points in time. That's it. And that duration can be converted to all sorts of different, readable units: milliseconds, seconds, minutes, hours, etc.
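Here's a quick sketch of how the two fit together. The first part uses fixed instants built with Instant.ofEpochSecond(), so the result is predictable. The second wraps a task in Instant.now() calls the way my benchmarks did. The class name TimingDemo and the helper timeMillis are hypothetical. One caveat: Instant.now() reads the wall clock, which can be adjusted while a program runs. For careful benchmarking, System.nanoTime() is usually the safer choice, since it is intended for measuring elapsed time.

```java
import java.time.Duration;
import java.time.Instant;

class TimingDemo {

    // Elapsed milliseconds between two instants, as in the line-count example.
    static long elapsedMillis(Instant start, Instant end) {
        return Duration.between(start, end).toMillis();
    }

    // Times an arbitrary task with Instant.now(), the approach used in my benchmarks.
    static long timeMillis(Runnable task) {
        Instant start = Instant.now();
        task.run();
        Instant end = Instant.now();
        return Duration.between(start, end).toMillis();
    }

    public static void main(String[] args) {
        // Epoch second 100 plus 0.25 s, and epoch second 101 plus 0.75 s.
        Instant start = Instant.ofEpochSecond(100, 250_000_000L);
        Instant end = Instant.ofEpochSecond(101, 750_000_000L);
        System.out.println(start.getEpochSecond()); // 100
        System.out.println(start.getNano());        // 250000000
        Duration d = Duration.between(start, end);
        System.out.println(d.toMillis());           // 1500
        System.out.println(d.getSeconds());         // 1
        System.out.println(elapsedMillis(start, end)); // 1500

        long ms = timeMillis(() -> {
            long sum = 0;
            for (int i = 0; i < 1_000_000; i++) {
                sum += i;
            }
        });
        System.out.println("Task took " + ms + "ms");
    }
}
```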

Here's an example of implementing Instant.now() and Duration.between() in my files. This one times how long it takes to get the line count of the full file.

```java
// try-with-resources closes the LineIterator's underlying reader
try (LineIterator it = FileUtils.lineIterator(f, "UTF-8")) {
    // get total line count
    Instant lineCountStart = Instant.now();
    int lines = 0;
    System.out.println("Reading file using Line Iterator");
    while (it.hasNext()) {
        String line = it.nextLine();
        lines++;
    }
    System.out.println("Total file line count: " + lines);
    Instant lineCountEnd = Instant.now();
    long timeElapsedLineCount =
            Duration.between(lineCountStart, lineCountEnd).toMillis();
    System.out.println("Line count time: " + timeElapsedLineCount + "ms");
} catch (IOException e) {
    e.printStackTrace();
}
```

Results

Here are the results after applying Instant.now() and Duration.between() to all of my different file read methods in Java.

I ran all three of my solutions against the 2.55GB file, which contained just over 13 million lines in total.

As you can see from the table, BufferedReader() and LineIterator() both fared well. Their timings are so close that it seems to be up to the developer which one to use.

BufferedReader() is nice because it requires no extra dependencies, but it is slightly more complex to set up in the beginning, with the FileReader() to wrap inside. LineIterator(), on the other hand, is an external library, but it makes iterating over the file extra easy once it is included as a dependency.

The percentage improvements are included at the end of the table above as well, for reference.

FileInputStream, in an interesting twist, got blown out of the water by the other two. Buffering the data stream, or using a library made specifically for iterating through text files, improved performance by about 73% on all tasks.

Below are the raw screenshots from my terminal for each of my solutions.

Solution #1: FileInputStream()

Solution #2: BufferedReader()

Solution #3: FileUtils.lineIterator()

Conclusion

In the end, buffered streams and custom file read libraries are the most efficient ways to process large data sets in Java. At least, that held for the large text files I was tasked with reading.

Thanks for reading my post on using Java to read really, really big files. If you'd like to see the original posts on Node.js that inspired this one, you can see part 1 here and part 2 here.

Check back in a few weeks. I'll be writing about Swagger with Express.js or something else related to web development & JavaScript, so please follow me so you don't miss out.

Thanks for reading. I hope this gives you an idea of how to handle large amounts of data with Java efficiently and how to performance test your solutions. Claps and shares are very much appreciated!

If you enjoyed reading this, you may also enjoy some of my other blogs:

- Using Node.js to Read Really, Really Large Datasets & Files (Pt 1)

- Keep Code Consistent Across Developers The Easy Way - With Prettier & ESLint

- The Absolute Easiest Way to Debug Node.js — with VS Code

Source: https://itnext.io/using-java-to-read-really-really-large-files-a6f8a3f44649
